In a recent workshop, Greg said that he had shared our board deck with Claude by Anthropic (aka AI). Our students were impressed by AI’s feedback, but they were more shocked that he had shared it at all. The deck contains confidential business data, which we’ve been warned not to share with AI.

For Section, sharing confidential data isn’t a huge deal. We’re a small startup and no one is watching us that closely. But if you’re one of our big enterprise partners – Bose, Comcast, L’Oreal, etc. – it’s clearly a dealbreaker.

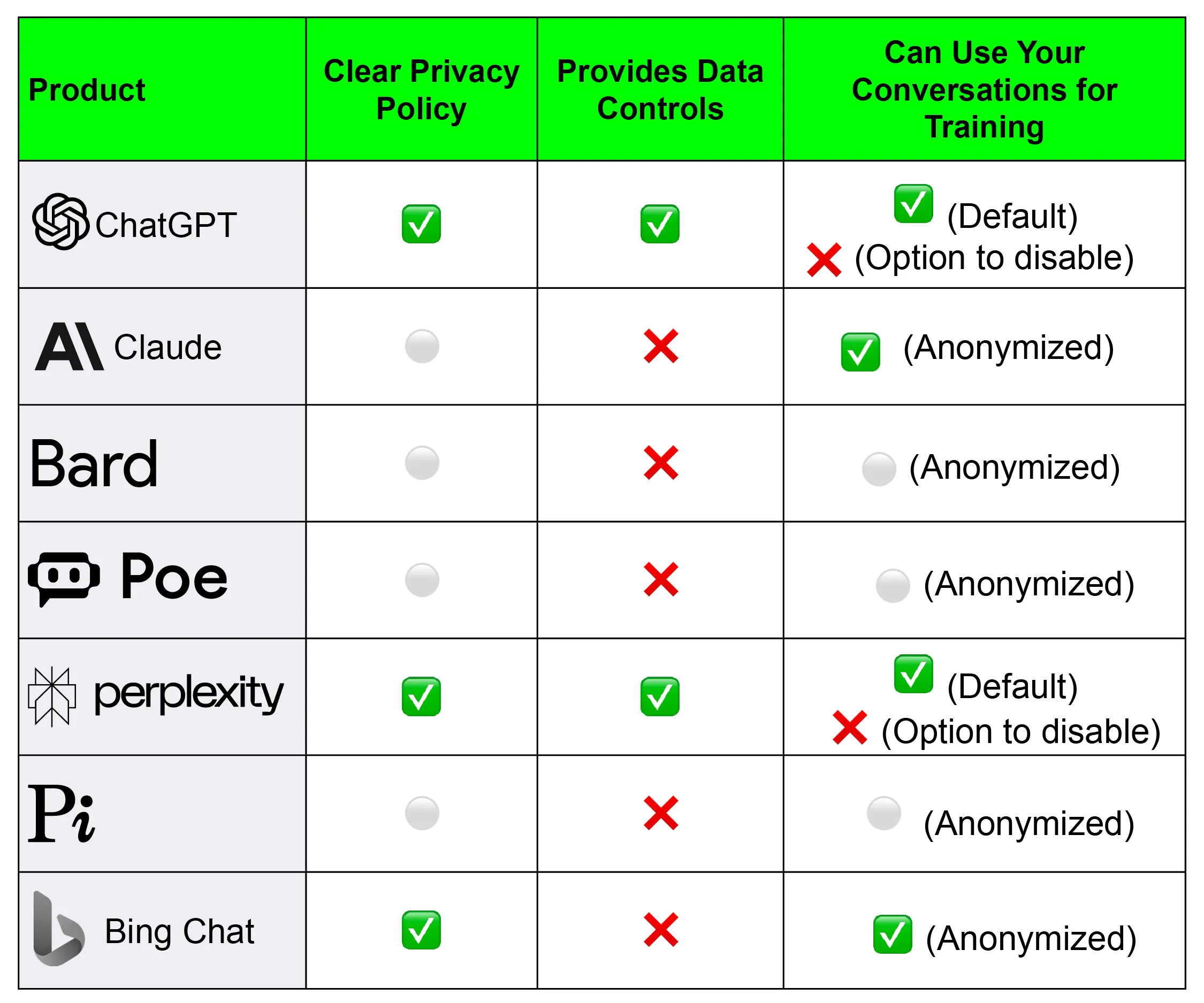

I hear concerns about AI’s privacy policies every day, so let’s dig in. Here’s our guide to how the most popular LLMs treat your data (as of October 2023).

Want to learn more about using AI for strategic, data-based decisions? Join my workshop in November. Use discount code PRIVACY for 20% off a workshop seat or STRATEGY for 25% off membership.

Which LLM is right for your privacy needs?

Updated as of publication (Oct. 2023)

Here’s the TL;DR

If you’re worried about data privacy, the best option is to use an enterprise-grade solution like ChatGPT Enterprise or Bing Chat Enterprise. These solutions have robust data governance policies and clear guidelines for how your data is used, and by default they do not use any of your data to train their models.

However, if you’re like us and can’t get OpenAI’s (admittedly overrun) sales team to call you back, your best bet is ChatGPT or Perplexity.AI. Both give you the strongest control over your data and explicitly let you turn model training on your data on or off. There are downsides to turning it off (e.g., in ChatGPT you can’t access beta features), but in exchange, you get maximum control.

What about all the other platforms?

Other platforms fall into one of two buckets:

- Clear, but non-controllable data policies

- Murky data policies

Claude and Bing Chat for Consumers have clear but non-controllable data policies. They don’t use your data for training by default, but if you give them feedback on responses, they take that as explicit permission to use your data. Both have “out of the box” privacy controls you cannot alter.

Poe, Bard, and Pi have murkier data policies and do not allow users to alter their data controls. All three use your anonymized data to some extent, but their data policies aren’t easy to understand. They don’t make clear how your data is being used.
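The groupings above can be written down as a small lookup, which is handy if you want to encode them in an internal tool-approval checklist. This is only a sketch of how this guide sorts the platforms as of October 2023; the `Bucket` names and the `platforms_in` helper are hypothetical labels made up for illustration, not official vendor categories.

```python
from enum import Enum


class Bucket(Enum):
    # Hypothetical labels summarizing the groups described in this guide.
    ENTERPRISE = "enterprise-grade: no training on your data by default"
    CONTROLLABLE = "consumer: you can switch training on or off"
    CLEAR_FIXED = "clear policy, but no user-facing controls"
    MURKY = "murky policy, no user-facing controls"


# Groupings as described in this guide (Oct. 2023); policies may have changed since.
PLATFORM_BUCKETS = {
    "ChatGPT Enterprise": Bucket.ENTERPRISE,
    "Bing Chat Enterprise": Bucket.ENTERPRISE,
    "ChatGPT": Bucket.CONTROLLABLE,
    "Perplexity.AI": Bucket.CONTROLLABLE,
    "Claude": Bucket.CLEAR_FIXED,
    "Bing Chat": Bucket.CLEAR_FIXED,
    "Poe": Bucket.MURKY,
    "Bard": Bucket.MURKY,
    "Pi": Bucket.MURKY,
}


def platforms_in(bucket):
    """Return the platforms this guide places in the given bucket, sorted by name."""
    return sorted(name for name, b in PLATFORM_BUCKETS.items() if b is bucket)


print(platforms_in(Bucket.CONTROLLABLE))  # ['ChatGPT', 'Perplexity.AI']
```

Keeping the mapping in one place makes it easy to update when a vendor changes its policy.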

Below is a side-by-side comparison and more details on each platform.

✅ - Yes, the platform provides the feature or capability.

❌ - No, the platform does not have this feature or capability.

⚪ - Unclear or unknown, the platform does not have a clear policy.

Deep Dive Into Privacy Controls

ChatGPT by OpenAI

How to enable privacy controls: Navigate to ChatGPT’s Settings. Under Data Controls, enable or disable Chat history and training.

How the privacy controls work:

- When you disable Chat history and training, OpenAI will not use your conversations to train their models.

- New conversations will not be saved, so you are unable to see a history and continue your chats.

- Existing conversations will still be saved and may be used for model training if you have not opted out.

- Beta features like Browse with Bing, Advanced Data Analysis, Plugins, and DALL·E 3 will be disabled.

- Conversations are retained by OpenAI for 30 days to monitor for abuse, then permanently deleted.
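To make the 30-day window concrete, here is a minimal sketch that estimates when a conversation should fall out of retention. It assumes the clock starts on the day of the conversation and counts calendar days; OpenAI's actual deletion timing may differ, and `earliest_deletion_date` is a hypothetical helper, not part of any OpenAI tool.

```python
from datetime import date, timedelta

# Retention window described above for ChatGPT with "Chat history and training" off.
ABUSE_MONITORING_DAYS = 30


def earliest_deletion_date(conversation_date, retention_days=ABUSE_MONITORING_DAYS):
    """Return the conversation date plus the retention window (calendar days)."""
    return conversation_date + timedelta(days=retention_days)


print(earliest_deletion_date(date(2023, 10, 1)))  # 2023-10-31
```

The `retention_days` parameter can be swapped for another provider's stated window if you want to compare them.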

Consideration for protecting your data:

- Privacy settings do not sync across browsers or devices. You will have to disable Chat history and training on each device and browser you use.

For more information: OpenAI’s Data Controls FAQ.

What about the enterprise version?

Privacy controls that restrict data usage are enabled by default in ChatGPT Enterprise, OpenAI's commercial product for businesses. Customer prompts, company data, and other inputs are not used for training or improving OpenAI's natural language models.

Companies retain full ownership and control over their data including inputs and outputs from ChatGPT conversations, within the boundaries of applicable laws. OpenAI agrees not to use customer data for model training or other purposes without explicit consent. For more information see OpenAI’s Enterprise Privacy page.

Claude by Anthropic

How to enable privacy controls: Privacy controls come “out of the box” and you can’t change them.

How the privacy controls work:

- Anthropic retains your prompts and outputs in the product to provide you with a consistent product experience over time.

- Anthropic automatically deletes prompts and outputs on the backend within 90 days of receipt or generation unless users and Anthropic agree otherwise.

- By default, the company does not use conversations with Claude to train their models.

Consideration for protecting your data:

- There are two instances when Anthropic may review and use your conversations:

  - Your conversations are flagged for Trust & Safety review. Anthropic may use or analyze them to improve their Acceptable Use Policy, including training models for use by Anthropic’s Trust and Safety team.

  - You’ve explicitly given Anthropic permission by reporting the data to them (for example, via the application’s feedback mechanisms) or by explicitly opting into training.

What about the enterprise version?

By default, Anthropic automatically deletes any prompts submitted to Claude and any responses generated after 28 days. The only exceptions are cases where Anthropic needs to retain data longer in order to enforce its Acceptable Use Policy (AUP) or comply with legal obligations.

For more information: Privacy and Legal FAQ and Anthropic’s Acceptable Use Policy.

Bard by Google

How to enable privacy controls: Privacy controls come “out of the box” and you can’t change them.

How the privacy controls work: Bard uses information from chats to improve their models in the following ways:

- Google anonymizes a portion of conversations by using automated systems to redact personal information like emails and phone numbers (though company names and data likely remain). These anonymized samples are then manually reviewed by Google employees who have been trained for this task.

- When users interact with Bard, Google collects their conversations, location, feedback, and usage information.
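To see why automated redaction does not make business data safe to share, here is a rough sketch of pattern-based redaction. This is not Google's actual system; the regular expressions, the `redact` helper, and the sample text (including "Acme Corp" and its figures) are all assumptions made up for illustration.

```python
import re

# Simplified, illustrative patterns; real redaction systems are far more thorough.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-number-like strings with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


# Hypothetical prompt containing both personal and business information.
sample = "Acme Corp Q3 revenue was $4.2M. Contact jane.doe@acme.com or 415-555-0100."
print(redact(sample))
# Acme Corp Q3 revenue was $4.2M. Contact [EMAIL] or [PHONE].
```

The personal identifiers are gone, but the company name and revenue figure pass through untouched, which is exactly the kind of content a human reviewer could still see.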

Consideration for protecting your data:

- Google advises users not to enter confidential information in their Bard conversations, including any data they wouldn’t want a reviewer to see or Google to use to improve their products, services, and machine-learning technologies.

For more information: Bard Privacy Hub

Poe by Quora

How to enable privacy controls: Privacy controls come “out of the box” and you can’t change them.

How the privacy controls work:

- Unlike the other tools in this guide, Poe (by Quora) provides access to several third-party LLMs, including Claude, OpenAI’s GPT-3.5 and GPT-4, and models from Meta and Google.

- Because of this, it is likely that data sharing depends on the model and provider.

- However, Poe's Terms of Service state that your account information is anonymized before being shared with third-party LLM providers and developers.

- These third parties may receive details about your interactions with bots on Poe, including the contents of your chats, to provide responses and to generally improve their services.

Consideration for protecting your data:

- While your anonymized information may be shared with third-party model providers, Poe advises against sharing any sensitive personal information with the bots.

For more information: Poe’s Privacy Policy and Terms of Service.

Perplexity.AI

How to enable privacy controls: AI data usage can be switched off in your account settings. Go to your profile and find the AI Data Usage toggle under Settings. Turn off the toggle to stop Perplexity from using your search data to improve its AI models.

How the privacy controls work:

- Perplexity offers consumers a standard and Pro version with the same data control features for privacy.

- With the setting AI Data Usage off, Perplexity.AI does not utilize the data provided to and generated by their application for training their models or enhancing their services.

- Perplexity also offers an API called pplx-api, which provides the same data control capabilities as the main Perplexity product. By default, Perplexity.AI does not utilize the data provided to and generated by their API for the purpose of training their models or enhancing their services.

- In all cases, Perplexity.AI retains users' personal information for as long as their account is active. If a user deletes their account, their personal information will be deleted from the company's servers within 30 days.

For more information: Perplexity’s privacy blog and Perplexity’s API privacy policy

Pi by Inflection

How to enable privacy controls: Privacy controls come “out of the box” and you can’t change them.

How the privacy controls work:

- Inflection de-identifies user data by removing names, phone numbers, email addresses, and other identifiers from logs before giving them to their model to learn from.

- Inflection AI retains all of the information it collects or that users submit for as long as the user's account is active, or as needed to provide services.

- To delete your account, contact Inflection through their How to Contact Us page and request deletion.

For more information: Inflection’s Privacy Policy.

Bing Chat by Microsoft

How to enable privacy controls: Privacy controls come “out of the box” and you can’t change them.

How the privacy controls work:

- Microsoft collects conversation and usage data, but does not use the prompts or conversations to train their models by default.

- If a user provides feedback on Bing through the interface, Microsoft will automatically and manually review the related prompts, similar to Anthropic's practices.

For more information: Microsoft’s Privacy Statement.

What about the enterprise version?

Bing Chat Enterprise does not have any end-user privacy settings or chat history capability. All data privacy controls are managed solely at the enterprise organization level. For more information see Microsoft’s Bing Chat Enterprise Privacy page.